Pattern Recognition

Aline Sindel

1. Abstract / Introduction

Given the large number of recurring image elements in the Luther portraits studied in the KKL (Critical catalogue of Luther portraits, 1519–1530), it is reasonable to assume that transfer methods were used in the Cranach workshop and beyond to reuse motifs both within a genre and across genres (e.g., from prints to paintings or vice versa). To investigate the possibility of serial production methods, the corresponding groups of images are compared with each other. Because of the large number of images, tools based on current approaches in pattern recognition and neural networks were developed to support individual steps of this similarity comparison.

The basic prerequisite for comparing the Luther portraits with each other is automatic image registration, which uses extracted features such as facial characteristics or crack structures to superimpose the digital paintings and prints accurately. Using automatic procedures, various groups of images were registered to each other according to specifically defined criteria, creating the basis for the similarity analysis. This includes, for instance, scaling all digital images according to their physical size to enable an absolute comparison between them, and registering all prints of a motif to a reference image.

The visualization tools are another important component: they allow the registered images to be inspected and compared visually using various superimposition techniques in order to discover subtle differences. When analyzing similar motifs, particular attention is paid to facial features and outlines. For this purpose, contour drawings can also be superimposed and their consensus visualized. In the similarity analysis, the tracings created directly on the physical objects within the art technology subproject are supplemented by machine-generated contour drawings of the digital images.

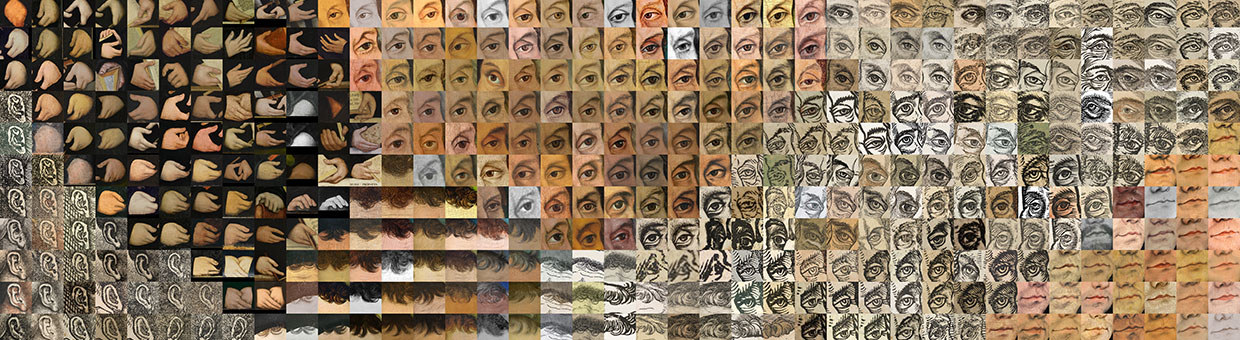

Another tool is the visual clustering of individual distinctive image patches such as eyes, noses, or hair curls, which arranges the patches visually according to their relative similarity to each other.

To support the similarity analysis of the prints, a tool for automatically marking subtle differences was created in addition to the visualization tools.

An automatic method for detecting chain line distances also contributes to the analysis of the paper structure.

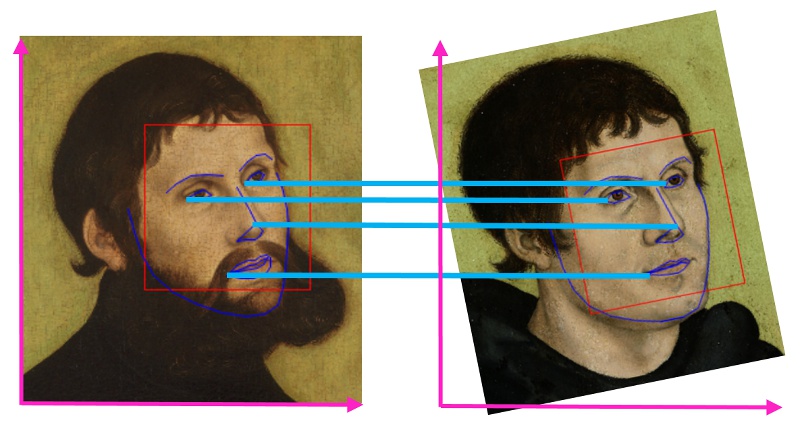

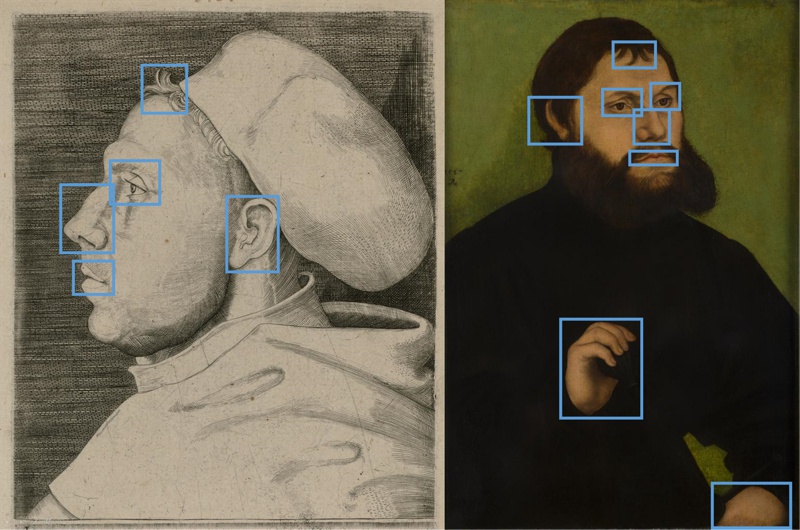

Fig. 1: Example of the registration of two portraits using four point correspondences. The drawn facial landmarks have been detected using dlib (http://dlib.net/face_landmark_detection.py.html).

Left: Lucas Cranach the Elder, Martin Luther as ‘Junker Jörg’ (Detail), Klassik Stiftung Weimar, Direktion Museen, No. G 9, (KKL-Nr. II.M2); Right: Cranach workshop or school, Portrait of Martin Luther as Augustinian Monk (Detail), Germanisches Nationalmuseum Nürnberg, on loan from Paul Wolfgang Merkel‘sche Familienstiftung, No. Gm1570, (KKL-Nr. I.6M1)

2. Image registration

Image registration is an image processing technique that computes a transformation between two images, which can then be used to map one image onto the other. The schematic example in Fig. 1 shows the registration of two portraits using four point correspondences. In this case, the transformation consists of a rotation and a translation that map the points of each pair onto each other.
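To illustrate how a computed transformation maps one image onto the other, the following NumPy sketch warps an image with a given rotation `R` and translation `t` by inverse mapping: each output pixel is traced back to its source position and filled with the nearest source pixel. The function name `warp_rigid` and the nearest-neighbour sampling are illustrative assumptions, not the project's implementation; production code would typically interpolate, e.g., bilinearly.

```python
import numpy as np

def warp_rigid(image, R, t, out_shape=None):
    """Warp `image` so that a source point p lands at R @ p + t (coordinates as (x, y)).

    Inverse mapping: every output pixel is traced back to its source position and
    filled with the nearest source pixel; positions outside the source stay 0.
    """
    h, w = out_shape if out_shape is not None else image.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    target = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    source = (target - t) @ R            # row-vector form of R.T @ (p - t); R is orthogonal
    sx = np.rint(source[:, 0]).astype(int)
    sy = np.rint(source[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < image.shape[1]) & (sy >= 0) & (sy < image.shape[0])
    out = np.zeros((h * w,) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[sy[valid], sx[valid]]
    return out.reshape((h, w) + image.shape[2:])

# Example: rotate by 10 degrees and shift by (15, -7) pixels
theta = np.deg2rad(10.0)
R = np.array([[np.cos(theta), -np.sin(theta)], [np.sin(theta), np.cos(theta)]])
warped = warp_rigid(np.random.default_rng(0).random((64, 64)), R, np.array([15.0, -7.0]))
```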

Image registration is crucial for the similarity analysis of the Luther images, as it allows two or more images to be compared directly.

For large numbers of images, such as prints, where many copies of the same motif exist, semi-automatic methods such as manually setting points in a graphical user interface (GUI) or manually aligning the images quickly become time-consuming, and they are generally less accurate than automatic, machine-learning-based registration methods.

The dataset of Luther images poses considerable challenges for the development of robust automatic registration methods. These include differences in the digitization process, such as different resolutions, camera settings, and perspectives. The prints show signs of aging of the paper or the print, such as corrugations, deformations, and wear. In addition, there are production-related differences, such as the varying thickness of the applied printing ink. For art technological analysis, imaging techniques other than visible light photography are often used, such as infrared reflectography, ultraviolet fluorescence photography, and X-ray radiography. These multi-modal images may differ in their content, since, depending on the technique, other materials may become visible, revealing additional details such as underdrawings or overpaintings.

The following is a brief summary of the image registration methods that were developed for this project.

Fig. 2: Multi-modal registration using CraquelureNet of the visible light image with the infrared reflectogram, the ultraviolet fluorescence photograph, and the X-ray radiograph: keypoints and descriptors are extracted by the neural network, point correspondences are matched based on the similarity of the descriptors, and the matches are used to compute a transformation. The registration results are visualized as false-color image overlays or as a linear blending between both images.

Multi-modal registration of paintings

Historical paintings usually show a fine network of cracks (craquelure) in the paint layer. Since the crack structures are visible in the images produced by the imaging techniques mentioned, they are suitable features for multi-modal image registration. In the CraquelureNet[1] registration method, a neural network is trained to detect distinctive positions in the craquelure ("keypoints") and to describe them with feature vectors ("descriptors"), see Fig. 2. The descriptors are then used to match keypoints between the images. To obtain as many matched pairs as possible, the network is trained so that descriptors of corresponding keypoints are more similar to each other than descriptors of non-corresponding keypoints.

[1] Sindel et al. 2023.
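The matching step and the training objective described above can be sketched with NumPy. Matching keypoints by mutual nearest neighbours under cosine similarity, and a triplet margin loss as the "matched pairs more similar than non-matched pairs" objective, are generic assumptions here; CraquelureNet's exact matching strategy and loss may differ, and a real training loop would compute the loss in a deep learning framework with gradients.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row (descriptor) to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def mutual_nn_matches(desc_a, desc_b):
    """Pairs (i, j) where a_i and b_j are each other's most similar descriptor."""
    sim = l2_normalize(desc_a) @ l2_normalize(desc_b).T   # cosine similarity matrix
    nn_ab = sim.argmax(axis=1)                             # best partner in B for each a_i
    nn_ba = sim.argmax(axis=0)                             # best partner in A for each b_j
    return [(i, int(j)) for i, j in enumerate(nn_ab) if nn_ba[j] == i]

def triplet_margin_loss(anchor, positive, negative, margin=0.5):
    """Zero once sim(anchor, positive) exceeds sim(anchor, negative) by at least `margin`."""
    a, p, n = l2_normalize(anchor), l2_normalize(positive), l2_normalize(negative)
    pos_sim = np.sum(a * p, axis=1)
    neg_sim = np.sum(a * n, axis=1)
    return float(np.mean(np.maximum(0.0, margin - pos_sim + neg_sim)))

# Toy example: the "visible light" descriptors are a shuffled, slightly noisy copy
rng = np.random.default_rng(0)
desc_ir = rng.normal(size=(5, 16))
perm = np.array([3, 0, 4, 1, 2])
desc_vis = desc_ir[perm] + 0.01 * rng.normal(size=(5, 16))
matches = mutual_nn_matches(desc_ir, desc_vis)
```

In practice, the resulting matches would still contain outliers, so the transformation is usually estimated robustly from them.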

Fig. 3: Registration based on facial landmarks: Results of the face detector, the facial landmark detector, and the registration.

Left: Workshop of Lucas Cranach the Elder, Martin Luther, Stiftung August Ohm, Hamburg, No. 2000-3a, (KKL-Nr. III.M-Sup01); Middle: Lucas Cranach the Elder, Martin Luther as Augustinian Monk with Order’s habit in front of a Niche (mirrored), Klassik Stiftung Weimar, Bestand Museen, No. DK 182/83, (KKL-Nr. I.2D1); Right: Tracing of the artwork: Lucas Cranach the Elder, Martin Luther, Kunstsammlungen der Veste Coburg, No. M417, (KKL-Nr. IV.M2a)

Cross-genre registration of paintings and prints

The registration of paintings and prints is very challenging due to their different representation techniques. Semantic image content such as facial features or contours can be used to register portraits of different genres.

The registration method developed on the basis of facial landmarks consists of three steps (cf. Fig. 3): in the first step, "Face Detection", a neural network localizes the coarse face region. Then, within the face region, the neural network "ArtFacePoints" (see Sindel et al. 2023) determines the exact positions of the facial landmarks ("Facial Landmark Prediction"). Finally, the transformation is computed from the landmarks of the two images.
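The final step, computing the transformation from two sets of corresponding landmarks, can be sketched with Umeyama's least-squares method for a similarity transform (rotation, translation, and scaling). This estimator is an assumed choice; the text does not state which one the project used. The functions `detect_face` and `predict_landmarks` are hypothetical placeholders for the face detector and ArtFacePoints, and the landmarks of both images are assumed to be in the same order.

```python
import numpy as np

def estimate_similarity_2d(src, dst, with_scale=True):
    """Least-squares fit of dst ≈ s * src @ R.T + t (Umeyama's method).

    With with_scale=False, s is fixed to 1 and only rotation and translation are fitted.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    cov = dst_c.T @ src_c / len(src)                      # 2x2 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.diag([1.0, np.sign(np.linalg.det(U @ Vt))])    # rule out reflections
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / (src_c ** 2).sum(axis=1).mean() if with_scale else 1.0
    t = mu_dst - s * R @ mu_src
    return s, R, t

def register_by_landmarks(moving_img, fixed_img, detect_face, predict_landmarks, with_scale=True):
    """Three-step sketch; detect_face(img) -> box and predict_landmarks(img, box) -> (N, 2)
    array are hypothetical stand-ins for the face detector and ArtFacePoints."""
    landmarks = []
    for img in (moving_img, fixed_img):
        box = detect_face(img)                           # step 1: coarse face region
        landmarks.append(predict_landmarks(img, box))    # step 2: facial landmarks
    return estimate_similarity_2d(landmarks[0], landmarks[1], with_scale)  # step 3
```

Passing `with_scale=False` restricts the fit to rotation and translation, which keeps the absolute size of the moving image unchanged.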

Fig. 4: Registration of a series of prints. Results of registered images to a reference image.

Multiple prints of: Daniel Hopfer, Martin Luther as Augustinian Monk with Order’s habit and doctoral cap, (KKL-Nr. I.4D3)

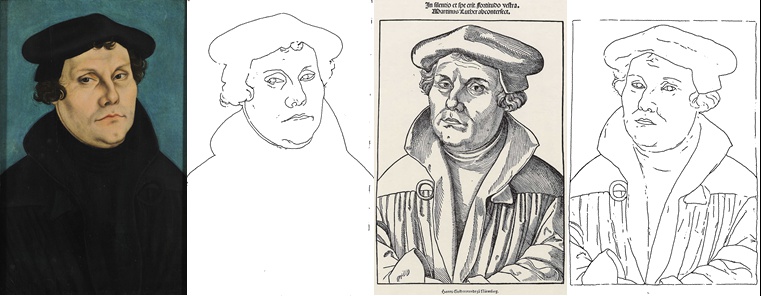

Fig. 5: Contour detection using Art2Contour of paintings and prints.

Left: Lucas Cranach the Elder, Martin Luther, Kunstsammlungen der Veste Coburg, No. M417, (KKL-Nr. IV.M2a); Right: Hans Brosamer (?), Martin Luther

Registration of a series of prints of the same motif

For some of Luther's print motifs, several impressions still survive that show the same motif but differ in their state of preservation. An exact comparison of these prints requires image registration. The project therefore used a groupwise registration method that registers each image of a group to a reference image from that group (cf. Fig. 4).[1]
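A groupwise registration loop can be sketched as follows. As a deliberately simplified stand-in for the project's method, each print is aligned to the reference only by an integer translation estimated with phase correlation; real prints additionally differ in rotation, scale, and paper deformation, which this sketch does not handle. All function names are hypothetical, and the images are assumed to be grayscale arrays of equal shape.

```python
import numpy as np

def phase_correlation_shift(reference, moving):
    """Integer (dy, dx) such that np.roll(moving, (dy, dx), axis=(0, 1)) best matches reference."""
    cross = np.fft.fft2(reference) * np.conj(np.fft.fft2(moving))
    cross /= np.abs(cross) + 1e-12            # keep only the phase information
    corr = np.fft.ifft2(cross).real           # sharp peak at the relative shift
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = reference.shape
    # Peaks beyond half the image size correspond to negative shifts
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

def register_group(images, ref_index=0):
    """Align every image of a group to the chosen reference image (translation only).
    np.roll wraps pixels around the border; real code would pad or crop instead."""
    reference = images[ref_index]
    return [np.roll(img, phase_correlation_shift(reference, img), axis=(0, 1)) for img in images]
```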

3. Contour detection

To compare artworks with similar motifs, art technologists create tracings of the paintings or prints that contain only the outlines of the depicted content. Especially in the cross-genre comparison of paintings and prints, the similarities between the images are reduced to these outlines. Therefore, an automatic contour detection method called Art2Contour[2] was developed that focuses specifically on the essential common contours (cf. Fig. 5).

Art2Contour is a generative adversarial network (GAN) that learns to recognize the contours in artworks through the interplay of two neural networks, the "generator" and the "discriminator", which compete during training. The generator creates a contour drawing from an artwork. The discriminator receives both the real pair (the artwork and its hand-drawn contour drawing) and the synthetic pair (the artwork and the generated contour drawing) and has the task of identifying the synthetic pairs as such. In this mutual training process, both networks keep improving at their tasks until, at the end of training, the generator can produce realistic-looking contour drawings.
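The adversarial objective described above can be written down compactly. The sketch below is an assumption in the style of pix2pix-like conditional GANs, not Art2Contour's actual code: it conditions the discriminator by stacking artwork and contour drawing along the channel axis and computes binary cross-entropy losses, where the discriminator is any callable returning probabilities. Any additional loss terms Art2Contour may use are not shown.

```python
import numpy as np

def bce(pred, target):
    """Binary cross-entropy for probabilities `pred` in (0, 1)."""
    pred = np.clip(pred, 1e-7, 1 - 1e-7)
    return float(-np.mean(target * np.log(pred) + (1 - target) * np.log(1 - pred)))

def make_pair(artwork, contour):
    """Condition the discriminator by stacking artwork and contour along the channel axis."""
    return np.concatenate([artwork, contour], axis=-1)

def gan_losses(discriminator, artwork, real_contour, fake_contour):
    d_real = discriminator(make_pair(artwork, real_contour))
    d_fake = discriminator(make_pair(artwork, fake_contour))
    # Discriminator: label real pairs 1 and generated pairs 0
    d_loss = bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))
    # Generator: rewarded when its generated pairs are classified as real
    g_loss = bce(d_fake, np.ones_like(d_fake))
    return d_loss, g_loss
```

The two losses pull in opposite directions on `d_fake`, which is exactly the competition between generator and discriminator.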

For the training and evaluation dataset, five contour drawings per image were drawn by hand on touchpads by different people, since how precisely a contour drawing is made and how many lines are used depends on the individual drawing it. To assess the generator during training, the generated contour drawing is compared both with each of the five hand-drawn contour drawings individually and with their consensus.
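One way to evaluate a generated contour drawing against five annotations and their consensus is sketched below. The majority threshold (`min_votes=3`) and the plain pixel-wise F1 score are assumptions; contour evaluations often allow a small spatial tolerance, which is omitted here for brevity.

```python
import numpy as np

def consensus(annotations, min_votes=3):
    """A pixel belongs to the consensus contour if at least `min_votes` annotators drew it."""
    votes = np.sum(np.asarray(annotations, dtype=bool), axis=0)
    return votes >= min_votes

def f1_score(pred, target):
    """Pixel-wise F1 between two binary contour maps (no spatial tolerance)."""
    pred, target = np.asarray(pred, dtype=bool), np.asarray(target, dtype=bool)
    tp = np.logical_and(pred, target).sum()
    if tp == 0:
        return 0.0
    precision = tp / pred.sum()
    recall = tp / target.sum()
    return float(2 * precision * recall / (precision + recall))

def evaluate(generated, annotations, min_votes=3):
    """F1 against each annotator individually and against their consensus."""
    per_annotator = [f1_score(generated, a) for a in annotations]
    return per_annotator, f1_score(generated, consensus(annotations, min_votes))
```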

Fig. 6: ImageOverlayApp for the visualization of registered images using different overlay and blending techniques.

Hans Baldung Grien, Martin Luther as Augustinian Monk with Order’s habit, book and Holy Spirit as a dove, (KKL-Nr. I.3D1), Left: Germanisches Nationalmuseum, Nürnberg, MP14682 (DE_GNMN_MP14682_Overall-001); Right: Zentralbibliothek Zürich, III M 155,9 (CH_ZBZ_III_M_155_9_verso-recto)

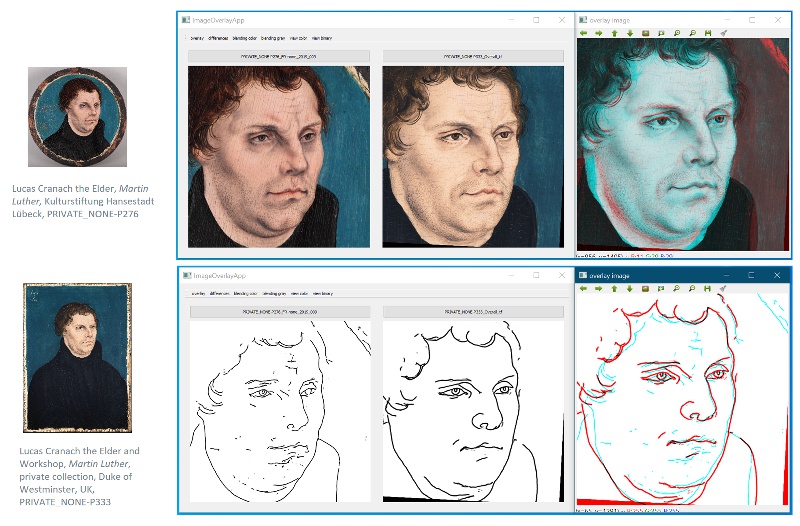

4. Visualization tools

Two visualization tools were developed as part of the project to analyze the images for subtle differences.

ImageOverlayApp

The ImageOverlayApp[1] is a Python GUI application for visually comparing registered image pairs in various ways (see Fig. 6). Subtle differences between images can be inspected using the difference image, a false-color overlay (red-cyan), or by interactively blending between the two images with a slider.
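The three comparison modes can be sketched in NumPy for two registered grayscale images scaled to [0, 1]. This illustrates the visualizations only and is not the ImageOverlayApp source; the GUI is omitted, and `alpha` stands in for the slider position.

```python
import numpy as np

def difference_image(a, b):
    """Absolute grey-value difference of two registered images."""
    return np.abs(a.astype(float) - b.astype(float))

def red_cyan_overlay(a, b):
    """Image a in the red channel, image b in green and blue:
    agreement appears grey, content only in a appears red, content only in b appears cyan."""
    return np.stack([a, b, b], axis=-1)

def blend(a, b, alpha):
    """Linear blending; alpha = 0 shows only a, alpha = 1 shows only b."""
    return (1.0 - alpha) * a + alpha * b
```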

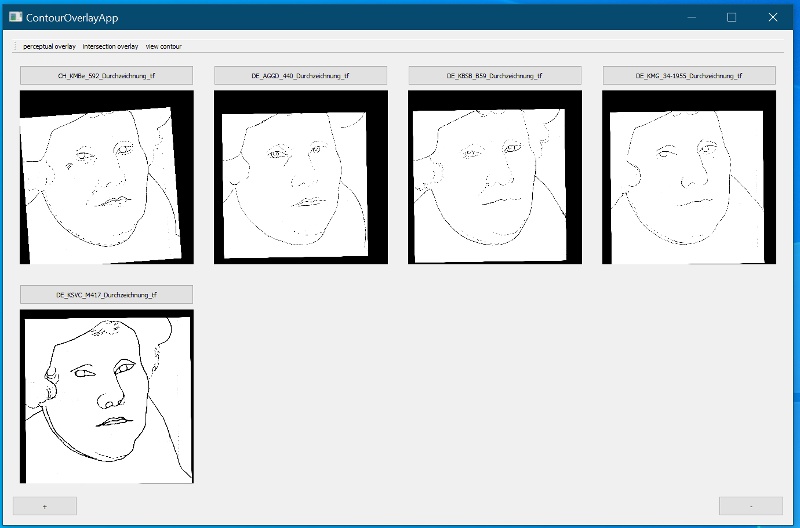

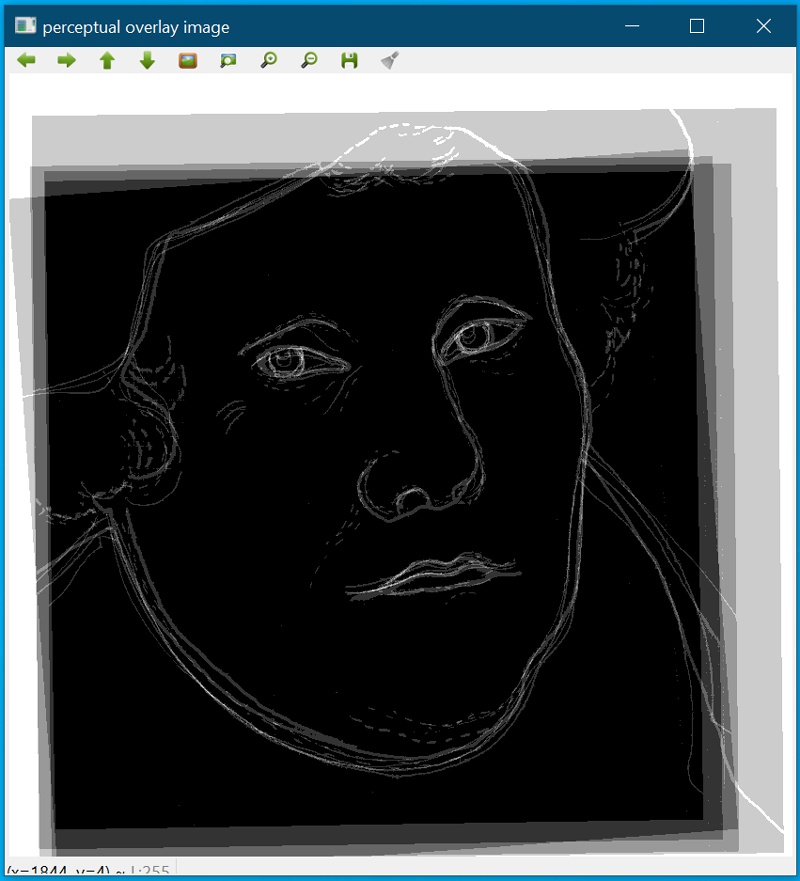

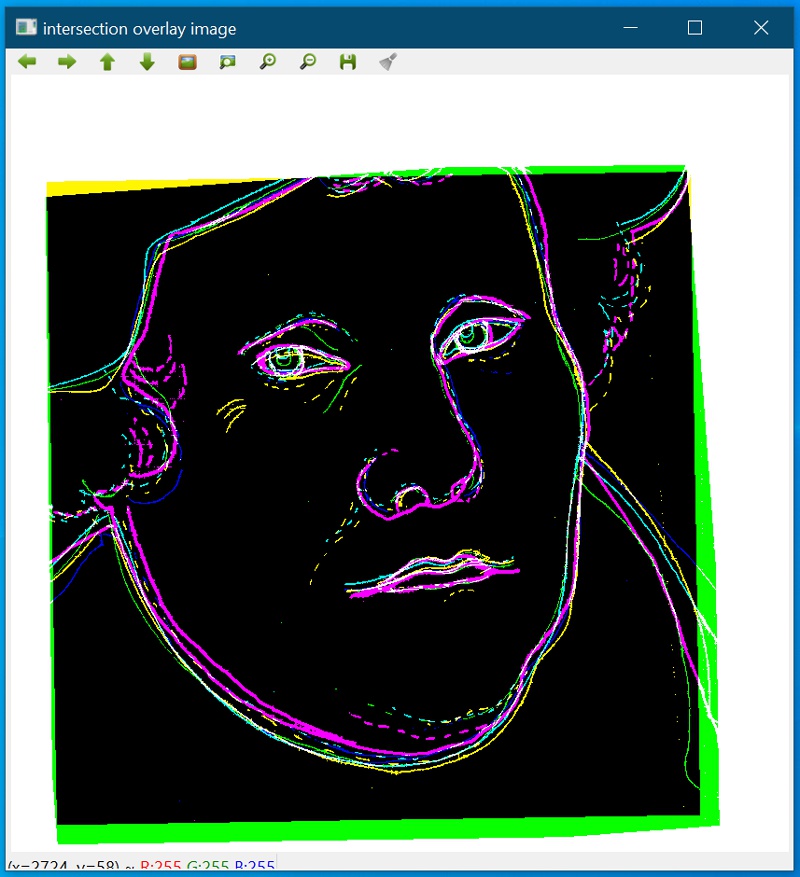

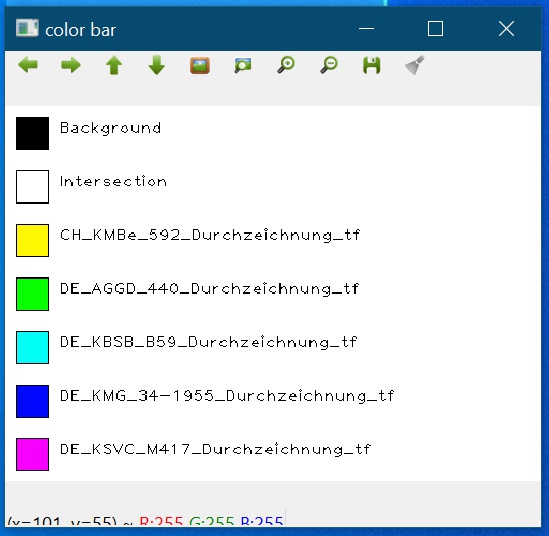

ContourOverlayApp

The ContourOverlayApp is a Python GUI application for visualizing any number of registered contour images (tracings or machine-generated contours, e.g., from Art2Contour), cf. Fig. 7. The "perceptual overlay" combines all contours into one overlay image in which every contour is displayed in white with relative transparency. The "intersection overlay" computes the areas where contours intersect and displays them in white. For each image, the contour area that does not intersect any other contour is assigned its own color from the HSV color space, so that the individual contours can be linked to their respective images.

[1] Sindel et al. 2020b.
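The two overlay modes of the ContourOverlayApp can be approximated as follows for a stack of registered binary contour maps. The exact transparency rule of the perceptual overlay is not specified in the text; averaging the contours, so that brightness grows with the number of coinciding contours, is an assumption, as are the evenly spaced HSV hues.

```python
import colorsys
import numpy as np

def perceptual_overlay(contours):
    """Average of N binary contour maps: each contour contributes 1/N of full white."""
    return np.asarray(contours, dtype=float).mean(axis=0)

def intersection_overlay(contours):
    """White where at least two contours coincide; otherwise a per-image HSV hue."""
    stack = np.asarray(contours, dtype=bool)
    n, h, w = stack.shape
    counts = stack.sum(axis=0)
    out = np.zeros((h, w, 3))
    out[counts >= 2] = 1.0                       # intersections in white
    for i in range(n):
        rgb = colorsys.hsv_to_rgb(i / n, 1.0, 1.0)  # evenly spaced hues
        out[stack[i] & (counts == 1)] = rgb      # contour parts unique to image i
    return out
```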

Fig. 7: ContourOverlayApp for the visualization of multiple contour drawings and accentuation of matches and mismatches in contours.

Tracings of different examples of the representatives of the portrait group IV.

Fig. 8: Size-dependent comparison of two Luther portraits and their machine-generated contours using Art2Contour.

5. Similarity analysis

The project investigated to what extent very similar motifs differ and in what form transfer procedures were used between images within a group and across genres. To address this question, various pattern recognition methods and visualization tools were developed to facilitate the pairwise and groupwise comparison of images. In addition, certain image patches in the portraits were analyzed separately for similarity. To support the classification of prints within their group, a method was developed that automatically marks subtle differences between two prints.

Size-dependent contour and facial feature comparison (using absolute head size).

To investigate possible transfer methods, the absolute size of the portraits relative to each other matters: if contour lines had been transferred directly from one image to another, their size would have remained the same.

Therefore, the Luther portraits were scaled to their absolute size, based on the width of each artwork measured manually in mm and the pixel length of this width in the digital image, which was determined with a semi-automatic tool. The portraits were then divided into groups by ascending head size.
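The scaling step amounts to bringing every image to a common resolution in pixels per millimetre. The sketch below computes that resize factor and then groups portraits by ascending head size. The target resolution of 10 px/mm, the head sizes given in mm as input, the hypothetical IDs, and the gap-based grouping rule with `tolerance_mm` are all assumptions, since the text does not specify how the groups were delimited.

```python
def physical_scale_factor(width_mm, width_px, target_px_per_mm=10.0):
    """Resize factor that brings an image to `target_px_per_mm` pixels per millimetre."""
    px_per_mm = width_px / width_mm          # current resolution of the digital image
    return target_px_per_mm / px_per_mm

def group_by_head_size(head_sizes_mm, tolerance_mm=5.0):
    """Sort artworks by ascending head size and start a new group whenever the gap
    to the previous artwork exceeds tolerance_mm (hypothetical grouping rule)."""
    groups, last = [], None
    for key in sorted(head_sizes_mm, key=head_sizes_mm.get):
        size = head_sizes_mm[key]
        if last is None or size - last > tolerance_mm:
            groups.append([])
        groups[-1].append(key)
        last = size
    return groups

factor = physical_scale_factor(width_mm=400.0, width_px=8000)   # 20 px/mm, so factor == 0.5
groups = group_by_head_size({"A": 120.0, "B": 123.0, "C": 160.0, "D": 118.0})
```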

For the absolute comparison, the contour images generated with Art2Contour and the infrared images of the paintings, registered with CraquelureNet, were used in addition to the paintings, prints, and tracings.

All images of a group were registered to the group's reference image using the facial landmark-based registration method, with the transformation restricted to rotation and translation so that the absolute sizes were preserved. An example comparison of two Luther portraits is shown in Fig. 8.

Fig. 9: Size-independent comparison of a painting print pair and their machine-generated contours using Art2Contour.

Left: Workshop of Lucas Cranach the Elder, Martin Luther (Detail), Melanchthonhaus, Bretten, No. 1a (KKL-Nr. IV.M12a); Right: Georg Pencz, Martin Luther with beret and gown (Detail, mirrored), Minneapolis, Thrivent Collection of Religious Art, No. 01-02, (KKL-Nr. IV.D1)

Fig. 10: Automatic detection using Yolov4 of predefined object classes like eye, nose, mouth etc.

Left: Lucas Cranach the Elder, Martin Luther as Augustinian Monk with Order’s habit and doctoral cap, Kupferstichkabinett Dresden, No. A 5389 (KKL-Nr. I.4D1); Right: Workshop of Lucas Cranach the Elder, Martin Luther as ‘Junker Jörg’, The Muskegon Museum of Art, Muskegon, Michigan, No. 39-5 (US_MMA_39-5)

Size-independent contour and facial feature comparison.

To check whether congruent contour features can also be found in images with different absolute head sizes, all source images of the previous comparison were registered to a common reference image based on their facial landmarks. This time, the registration included rotation, translation, and scaling, which allows a comparison independent of absolute head size (see Fig. 9).

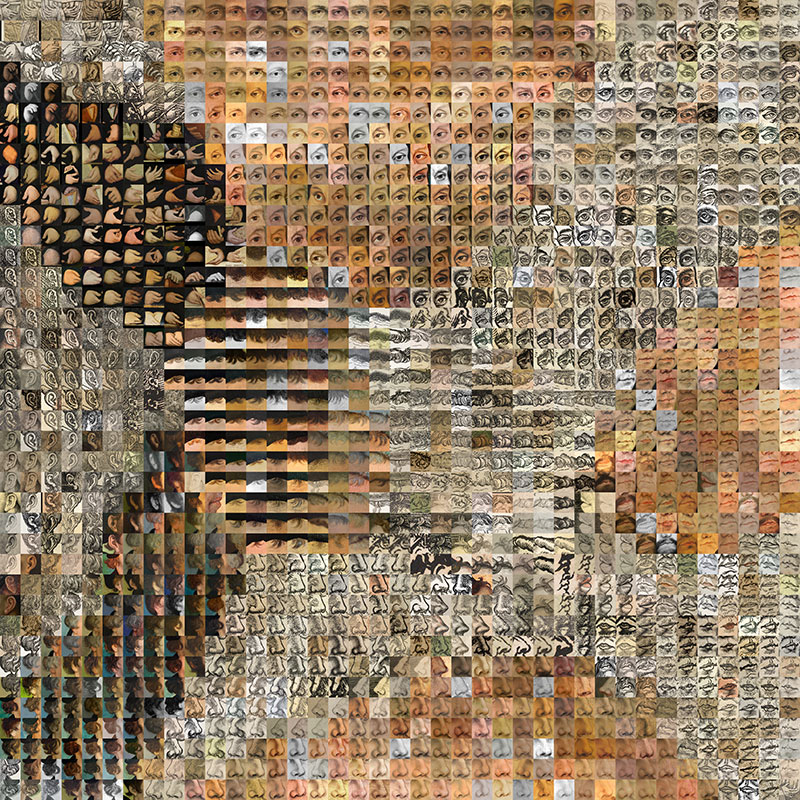

Clustering and similarity search of visually distinctive image elements in the Luther portraits.

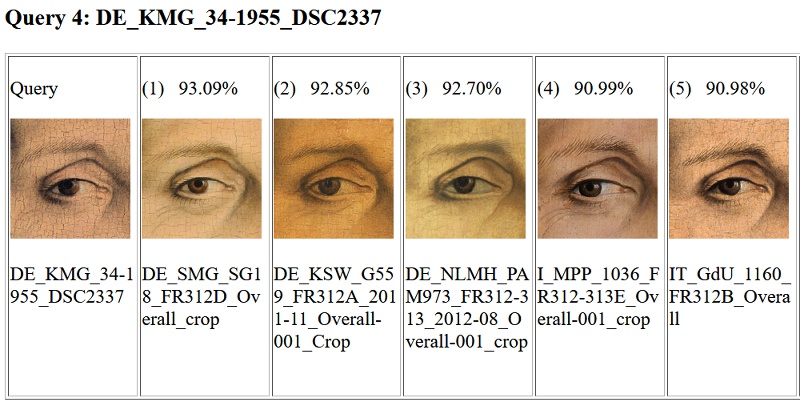

Another aspect of the project's similarity analysis is the comparison of visually distinctive image elements in the portraits. To extract these image patches automatically from the Luther paintings and prints, the object detector Yolov4[1] was trained for the object classes eye, nose, mouth, ear, hair curl, and hands (see Fig. 10).

Each image patch can be described by a high-dimensional feature vector. To compute these feature vectors, a neural network is trained for classification, i.e., to recognize from the image patch whether it shows an eye, nose, mouth, etc.

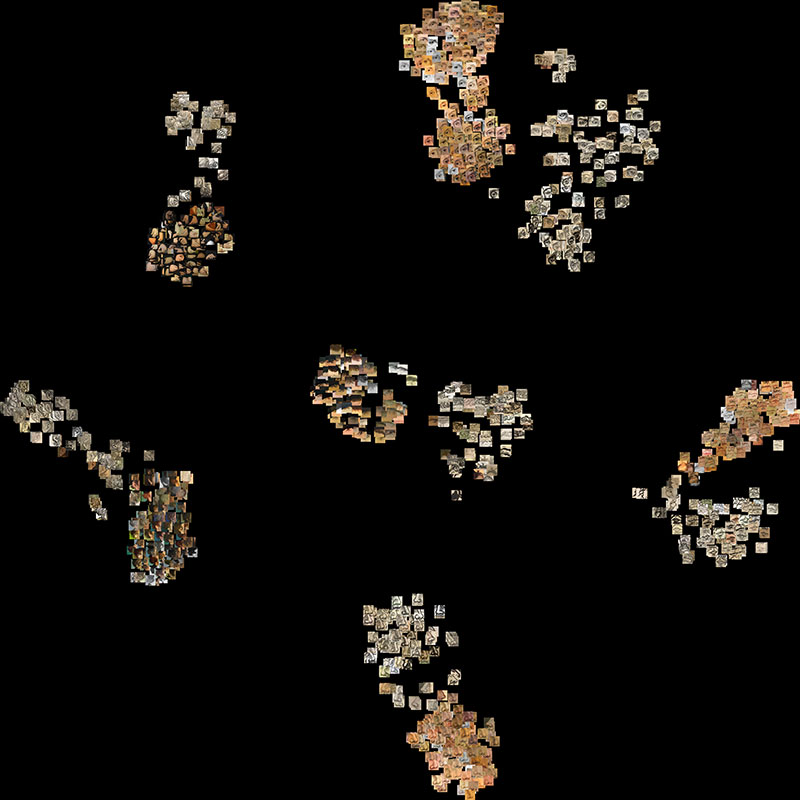

The "t-distributed stochastic neighbor embedding" (t-SNE)[2] method is used to visualize the image elements as clusters. t-SNE maps high-dimensional data to a lower-dimensional space (2D in this case) while preserving local neighborhoods, and is therefore suitable for visualizing relative similarities between objects in 2D.
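A minimal t-SNE projection with scikit-learn looks like this, assuming the library is installed. The 128-dimensional random vectors are synthetic stand-ins for the patch features, not project data, and the parameter values are illustrative.

```python
import numpy as np
from sklearn.manifold import TSNE

# Synthetic stand-ins for patch feature vectors: two groups of 20 vectors in 128-D
rng = np.random.default_rng(0)
features = np.vstack([rng.normal(0.0, 1.0, (20, 128)), rng.normal(5.0, 1.0, (20, 128))])

# Perplexity (roughly the effective number of neighbours) must be below the sample count
tsne = TSNE(n_components=2, perplexity=10.0, init="pca", random_state=0)
embedding = tsne.fit_transform(features)   # one 2-D point per feature vector
print(embedding.shape)  # (40, 2)
```

Distances in the resulting map are only meaningful locally: nearby points are similar, while gaps between clusters should not be read as exact dissimilarities.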

Projecting the high-dimensional feature vectors onto a 2D space with t-SNE thus allows the relative similarities between them to be represented and analyzed visually. Applied to the feature vectors of all categories, the t-SNE visualization (cf. Fig. 11a) shows clusters for the individual categories, which are further subdivided into paintings and prints and into various motif groups. For a more detailed inspection of the nearest neighbors in the similarity map, the clusters in Fig. 11b were expanded in the visualization so that they completely fill the square and the gaps between the clusters disappear.
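The text does not say how the clusters were expanded to fill the square. One common way to obtain such a space-filling layout, assumed here, is to assign every t-SNE point to a unique cell of a square grid while minimising the total squared displacement, which is a linear assignment problem solvable with SciPy's `linear_sum_assignment`. The function name `snap_to_grid` is hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def snap_to_grid(points_2d):
    """Assign each 2-D point to a distinct cell of a square grid with minimal total
    squared displacement; returns the (column, row) cell index of every point."""
    pts = np.asarray(points_2d, dtype=float)
    n = len(pts)
    side = int(np.ceil(np.sqrt(n)))
    # Normalise the embedding to the unit square
    span = np.maximum(np.ptp(pts, axis=0), 1e-12)
    pts = (pts - pts.min(axis=0)) / span
    gx, gy = np.meshgrid(np.linspace(0.0, 1.0, side), np.linspace(0.0, 1.0, side))
    cells = np.stack([gx.ravel(), gy.ravel()], axis=1)        # side*side cell positions
    cost = ((pts[:, None, :] - cells[None, :, :]) ** 2).sum(axis=2)
    rows, cols = linear_sum_assignment(cost)                  # n <= side*side: every point gets a cell
    grid_pos = np.empty((n, 2), dtype=int)
    grid_pos[rows, 0] = cols % side
    grid_pos[rows, 1] = cols // side
    return grid_pos
```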

Fig. 11a: t-SNE of all features. Clustering of individual features and sub-grouping into paintings, prints, and different motif groups.

Fig. 11b: t-SNE of all features. Expansion of the clusters in the visualization to completely fill the image.

For a direct comparison of individual image elements, a similarity search was implemented that retrieves the k most similar image elements for a source image ("query"). For this purpose, a neural network was trained according to the principle of self-supervised learning to compute feature vectors whose cosine similarity measures how similar two image elements are. Self-supervised here means that a copy of each training image is slightly modified using augmentation strategies (such as rotation, color or contrast changes, or blurring), so that it forms a very similar image pair with the original. During training, the similarity network is optimized so that the feature vectors of these synthetically generated pairs have a high cosine similarity. After training, the network is used to compute the pairwise similarity of all image elements of the Luther images. Figure 12 shows the search result for an example image of the category eye.
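Once feature vectors are available, the k-nearest-neighbour search itself reduces to ranking by cosine similarity. In the sketch below, the feature vectors are assumed to be given (in the project they come from the trained similarity network), and `augment` is a hypothetical example of how a positive training pair might be generated with a contrast change and a rotation.

```python
import numpy as np

def top_k_similar(query, gallery, k=5):
    """Indices and cosine similarities of the k gallery vectors most similar to `query`."""
    q = query / np.linalg.norm(query)
    g = gallery / np.linalg.norm(gallery, axis=1, keepdims=True)
    sims = g @ q
    order = np.argsort(-sims)[:k]            # highest similarity first
    return order, sims[order]

def augment(patch, rng):
    """Hypothetical light augmentation: random contrast change plus a 90-degree rotation."""
    contrast = rng.uniform(0.8, 1.2)
    mean = patch.mean()
    out = (patch - mean) * contrast + mean
    return np.rot90(out, k=int(rng.integers(0, 4)))
```

A patch and `augment(patch, rng)` would form one synthetic positive pair whose feature vectors the network learns to map close together.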

Fig. 12: Results of the similarity search separately for the category eye.

Different details of representatives of the portrait group IV.

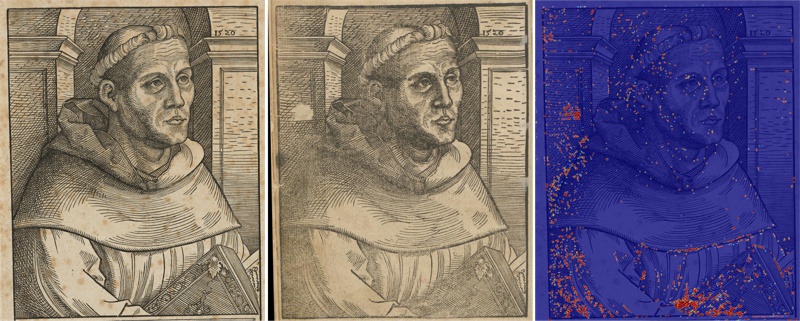

Fig. 13: Marked differences in two prints by a neural network.

Left: Unknown, Martin Luther as Augustinian Monk with Order’s habit and book in front of an arched niche with cornices and inscription (KKL-Nr. I.2D7), Lutherhaus, Wittenberg, No. 4° III 205 (DE_RFBW_LHW_III_205_2019-01); Right: Unknown, Martin Luther as Augustinian Monk with Order’s habit and book in front of an arched niche with cornices (KKL-Nr. I.2D6) (DE_UBR_Fg-1830-8)

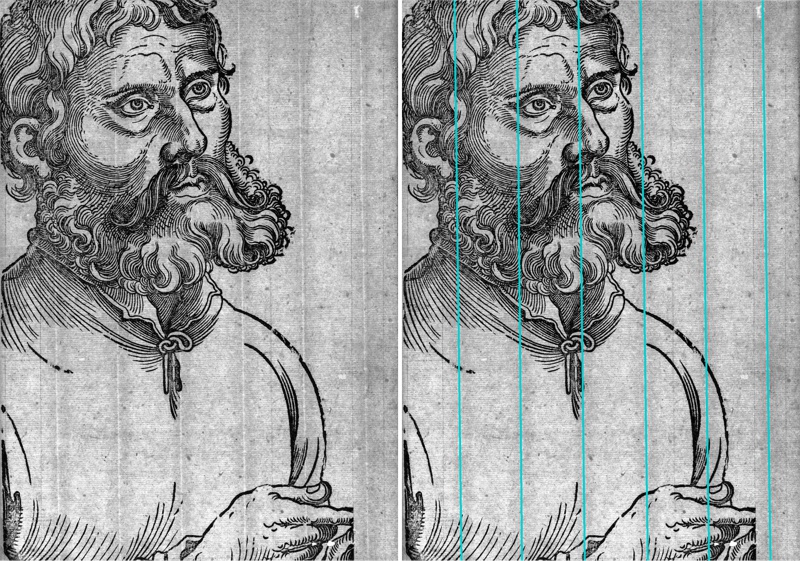

Fig. 14: Chain line detection using ChainLineNet in transmitted light images of prints.

Hans Sebald Beham, Luther as ‘Junker Jörg’, Kunstpalast, Düsseldorf, No. KA (FP) 363 D, Transmitted light, (KKL-Nr. II.D3.2)

Differences in Prints

The similarity analysis of a series of prints of supposedly the same motif relies on finding subtle differences in the print, e.g., imperfections or changes in the printing plate or printing block. To support the detection of these subtle differences, a method was developed that automatically marks the positions of possible differences between two prints. For this purpose, all prints of a motif are first registered to a reference image, and then the positions of differences are detected pairwise with a neural network (see Fig. 13, in which larger deviations are shown in red).
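The project marks differences with a neural network. To illustrate only the register-then-compare idea, the following sketch uses a simple non-learned stand-in: it averages the absolute difference of two registered grayscale prints over square tiles and reports the tiles above a threshold. Block size and threshold are hypothetical, and such a baseline would likely also flag harmless variations such as differences in ink thickness or paper wear.

```python
import numpy as np

def mark_differences(a, b, block=8, threshold=0.2):
    """Top-left (row, col) of block x block tiles whose mean absolute difference exceeds
    `threshold`; a and b are registered grayscale images in [0, 1]."""
    diff = np.abs(a.astype(float) - b.astype(float))
    h, w = diff.shape
    hb, wb = h // block, w // block
    # Average the difference inside non-overlapping tiles (incomplete border tiles are dropped)
    tiles = diff[: hb * block, : wb * block].reshape(hb, block, wb, block).mean(axis=(1, 3))
    return np.argwhere(tiles > threshold) * block
```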

6. Chain line detection

In the art technology part of the project, the paper structure of the prints was analyzed and various metrics such as chain line distances were recorded. Chain lines are the imprint of the wire used in handmade papermaking. Measuring the distances between chain lines yields a distance pattern that is characteristic of the wire used. If two papers have very similar distance intervals, this may indicate that they were made with the same wire. Reading out chain lines and their distances manually is very time-consuming, so automatic chain line detection is desirable to support art technological evaluations.
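Given the positions of the chain lines across a sheet, the distance pattern and a simple comparison between two papers could look like this. The comparison criterion (the smallest mean absolute difference over all alignments of the shorter interval sequence against the longer one) is a hypothetical choice for illustration.

```python
import numpy as np

def chain_line_intervals(positions_mm):
    """Distances between neighbouring chain lines, given their positions across the sheet."""
    return np.diff(np.sort(np.asarray(positions_mm, dtype=float)))

def best_interval_match(intervals_a, intervals_b):
    """Smallest mean absolute difference over all alignments of the shorter interval
    sequence against the longer one (sheets may show different portions of the wire pattern)."""
    a, b = np.asarray(intervals_a, dtype=float), np.asarray(intervals_b, dtype=float)
    if len(a) > len(b):
        a, b = b, a
    return min(float(np.mean(np.abs(b[i:i + len(a)] - a))) for i in range(len(b) - len(a) + 1))

intervals = chain_line_intervals([10.0, 35.5, 60.0, 86.0, 111.0])   # [25.5, 24.5, 26.0, 25.0]
```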

First, a neural network was trained on a small subset of the measured prints to detect the chain lines in the transmitted light images automatically, and the mean distances between the lines were then calculated in further processing steps.[1] Detected lines located far out toward the edge, or with a steep slope compared to the other lines, were marked as uncertain candidates, so that art technology experts could discard them or confirm them as correct.
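The post-processing described here can be sketched as fitting a straight line to the pixels of each detected chain line, measuring the mean spacing, and flagging lines whose slope deviates from the median slope. The line model x = m·y + c for near-vertical lines, the threshold `max_slope_dev`, and measuring the spacing at mid-height are assumptions; the edge criterion mentioned in the text is not implemented.

```python
import numpy as np

def fit_line(ys, xs):
    """Least-squares fit x = m * y + c for a near-vertical chain line (pixel coordinates)."""
    m, c = np.polyfit(ys, xs, deg=1)
    return m, c

def analyse_lines(lines, height, max_slope_dev=0.02):
    """lines: list of (ys, xs) pixel coordinates of detected lines.
    Returns the mean spacing at mid-height and the indices of uncertain lines."""
    params = np.array([fit_line(ys, xs) for ys, xs in lines])
    slopes = params[:, 0]
    # Flag lines whose slope deviates strongly from the typical (median) slope
    uncertain = np.flatnonzero(np.abs(slopes - np.median(slopes)) > max_slope_dev)
    x_mid = np.sort(slopes * height / 2 + params[:, 1])   # x position of each line at mid-height
    return float(np.mean(np.diff(x_mid))), uncertain.tolist()
```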

With ChainLineNet,[2] the accuracy of chain line detection and distance measurement was further improved on a much larger test dataset. Unlike the previous method, which computes the exact line positions only in post-processing steps, ChainLineNet is an end-to-end trainable network that learns all steps from line detection to line parameterization, i.e., computing the parameters of the line equation. An example is shown in Fig. 14.

7. Conclusion

The computer science methods presented here were developed for the project "Critical catalogue of Luther portraits (1519–1530)", but they can also be used for image analysis in general. They were designed to support the art technological and art historical analyses. Using current methods of pattern recognition and neural networks, new digital means of comparison were created. This made it possible to register the large pool of images in a very short time and to increase the accuracy of the image analysis. The visualization tools enabled precise comparisons and made similarities and differences clearly visible.